Developed a general classification and entity recognition system built on top of Gensim's pretrained word2vec word embeddings. Preprocessing consisted of careful label selection and zero padding; the model was then built using a predefined set of hyperparameters.
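
As a rough illustration of the zero-padding step, here is a minimal sketch in plain Python. The function name `pad_sequences`, the choice of right-padding, the truncation of over-long inputs, and the reserved padding id `0` are all assumptions made for this example, not details of the actual pipeline.

```python
def pad_sequences(sequences, max_len, pad_id=0):
    """Right-pad (or truncate) each list of token ids to exactly max_len.

    pad_id=0 is assumed to be reserved for padding, so no real token uses it.
    """
    padded = []
    for seq in sequences:
        clipped = seq[:max_len]  # drop tokens beyond max_len
        padded.append(clipped + [pad_id] * (max_len - len(clipped)))
    return padded


# Three token-id sequences of different lengths become one rectangular batch.
batch = [[4, 9, 2], [7], [3, 8, 5, 1, 6]]
padded_batch = pad_sequences(batch, max_len=4)
```

Every row of `padded_batch` has the same length, which is what lets variable-length sentences be stacked into a single batch for the model.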

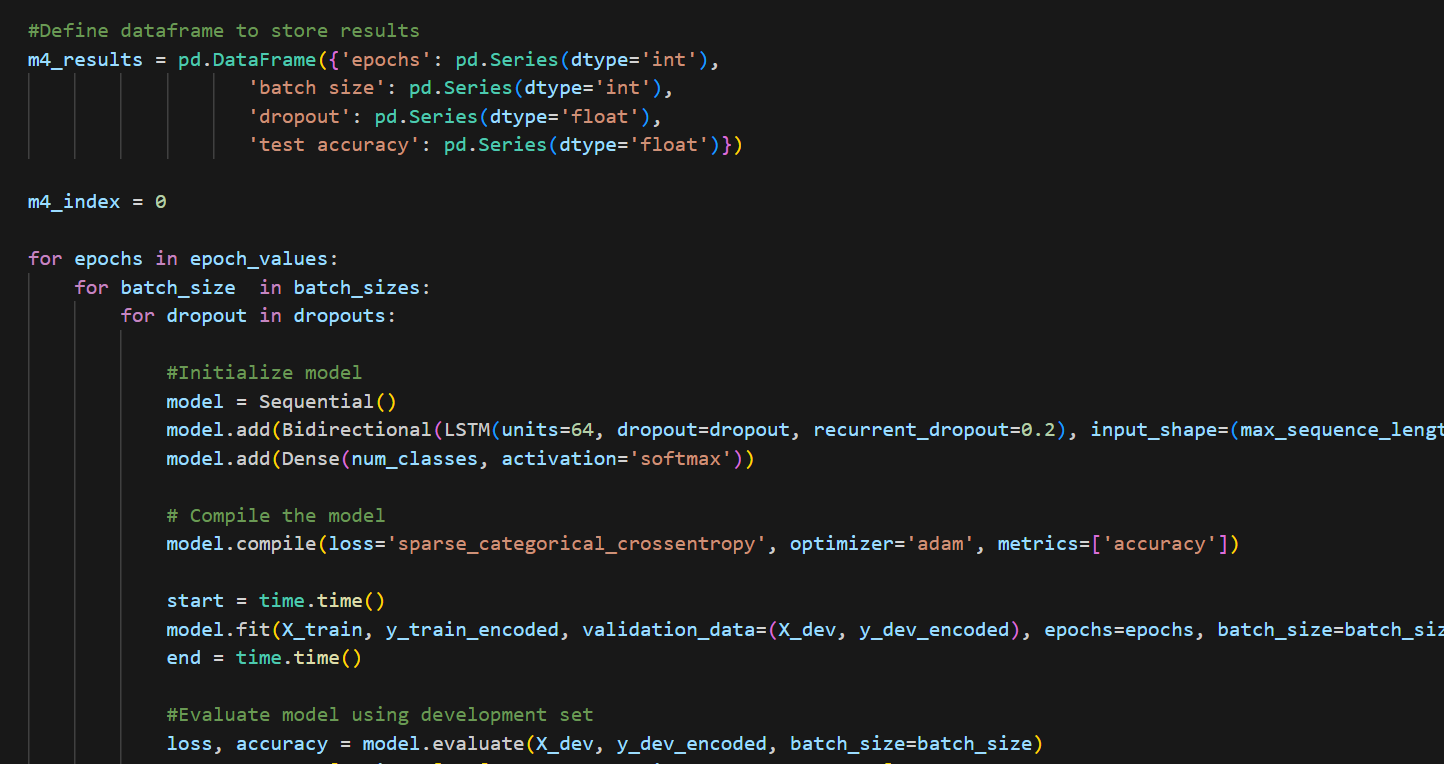

We experimented with single-layer and two-layer RNNs, as well as unidirectional and bidirectional LSTMs. The bidirectional LSTM achieved the highest prediction accuracy, which we attribute to its access to both past and future context at each position. For the LSTMs, we had to choose the number of layers and the number of units per layer carefully to prevent overfitting.
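
To make the "past and future context" point concrete, the sketch below uses a toy scalar tanh recurrent cell rather than a real LSTM. The weights `w_x` and `w_h`, the function names, and the input values are illustrative assumptions. It only demonstrates the mechanism: a backward pass lets the representation of an early position depend on later inputs.

```python
import math


def run_rnn(inputs, w_x=0.5, w_h=0.8):
    """Toy scalar tanh recurrent cell; returns the hidden state at every step."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def run_bidirectional(inputs):
    """Pair each step's forward state (past context) with its backward state
    (future context)."""
    forward = run_rnn(inputs)
    backward = run_rnn(inputs[::-1])[::-1]  # re-align to original time order
    return list(zip(forward, backward))


seq_a = [1.0, -0.5, 0.3, 2.0]
seq_b = [1.0, -0.5, 0.3, -2.0]  # differs from seq_a only in the final step

uni_a, uni_b = run_rnn(seq_a), run_rnn(seq_b)
bi_a, bi_b = run_bidirectional(seq_a), run_bidirectional(seq_b)

# The forward-only state at step 0 cannot see the changed final input,
# while the bidirectional state at step 0 can.
first_step_same_unidirectional = uni_a[0] == uni_b[0]
first_step_same_bidirectional = bi_a[0] == bi_b[0]
```

In the forward-only run, the first position is identical for both sequences; in the bidirectional run it differs, because the backward state has already read the rest of the sequence. For token-level tasks such as entity recognition, that extra context is a plausible reason for the accuracy gap.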

We also ran into several issues when implementing the RNNs, such as exploding gradients, which we addressed with gradient clipping.
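
Gradient clipping comes in more than one form, and the variant used in the project is not specified here. The sketch below shows one common variant, clipping by global L2 norm, in plain Python. The function name, the nested-list gradient layout, and the threshold `max_norm=1.0` are assumptions for illustration.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Scale all gradients together so their combined L2 norm is at most max_norm.

    grads is a list of per-parameter gradient lists (a hypothetical layout).
    Returns the clipped gradients and the norm measured before clipping.
    """
    total_norm = math.sqrt(sum(g * g for layer in grads for g in layer))
    if total_norm <= max_norm:
        return [list(layer) for layer in grads], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in layer] for layer in grads], total_norm


# Global norm here is sqrt(3^2 + 4^2 + 12^2) = 13, far above the threshold.
grads = [[3.0, 4.0], [12.0]]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = math.sqrt(sum(g * g for layer in clipped for g in layer))
```

Because every gradient is multiplied by the same factor, the update keeps its direction and only its magnitude is capped. This is why norm-based clipping is often preferred over clipping each value independently.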

For full implementation code, please contact me directly.
